Quoted from: https://digital.library.adelaide.edu.au/dspace/bitstream/2440/15227/1/138.pdf

LDA is closely related to analysis of variance (ANOVA) and regression analysis, which also attempt to express one dependent variable as a linear combination of other features or measurements. However, ANOVA uses categorical independent variables and a continuous dependent variable, whereas discriminant analysis has continuous independent variables and a categorical dependent variable (i.e. the class label). Logistic regression and probit regression are more similar to LDA than ANOVA is, as they also explain a categorical variable by the values of continuous independent variables. These other methods are preferable in applications where it is not reasonable to assume that the independent variables are normally distributed, which is a fundamental assumption of the LDA method.

LDA is also closely related to principal component analysis (PCA) and factor analysis in that all three look for linear combinations of variables which best explain the data. LDA explicitly attempts to model the difference between the classes of data. PCA, in contrast, does not take into account any difference in class, and factor analysis builds the feature combinations based on differences rather than similarities. Discriminant analysis is also different from factor analysis in that it is not an interdependence technique: a distinction between independent variables and dependent variables (also called criterion variables) must be made.

LDA works when the measurements made on independent variables for each observation are continuous quantities. When dealing with categorical independent variables, the equivalent technique is discriminant correspondence analysis.

Discriminant analysis is used when groups are known a priori (unlike in cluster analysis). Each case must have a score on one or more quantitative predictor measures, and a score on a group measure. In simple terms, discriminant function analysis is classification: the act of distributing things into groups, classes or categories of the same type.

LDA for two classes

Consider a set of observations $\vec{x}$ (also called features, attributes, variables or measurements) for each sample of an object or event with known class $y$. This set of samples is called the training set. The classification problem is then to find a good predictor for the class $y$ of any sample of the same distribution (not necessarily from the training set) given only an observation $\vec{x}$.

LDA approaches the problem by assuming that the conditional probability density functions $p(\vec{x}|y=0)$ and $p(\vec{x}|y=1)$ are both normally distributed with mean and covariance parameters $(\vec{\mu}_0, \Sigma_0)$ and $(\vec{\mu}_1, \Sigma_1)$, respectively. Under this assumption, the Bayes optimal solution is to predict points as being from the second class if the log of the likelihood ratios is bigger than some threshold T, so that:

$$(\vec{x}-\vec{\mu}_0)^T \Sigma_0^{-1} (\vec{x}-\vec{\mu}_0) + \ln|\Sigma_0| - (\vec{x}-\vec{\mu}_1)^T \Sigma_1^{-1} (\vec{x}-\vec{\mu}_1) - \ln|\Sigma_1| > T$$

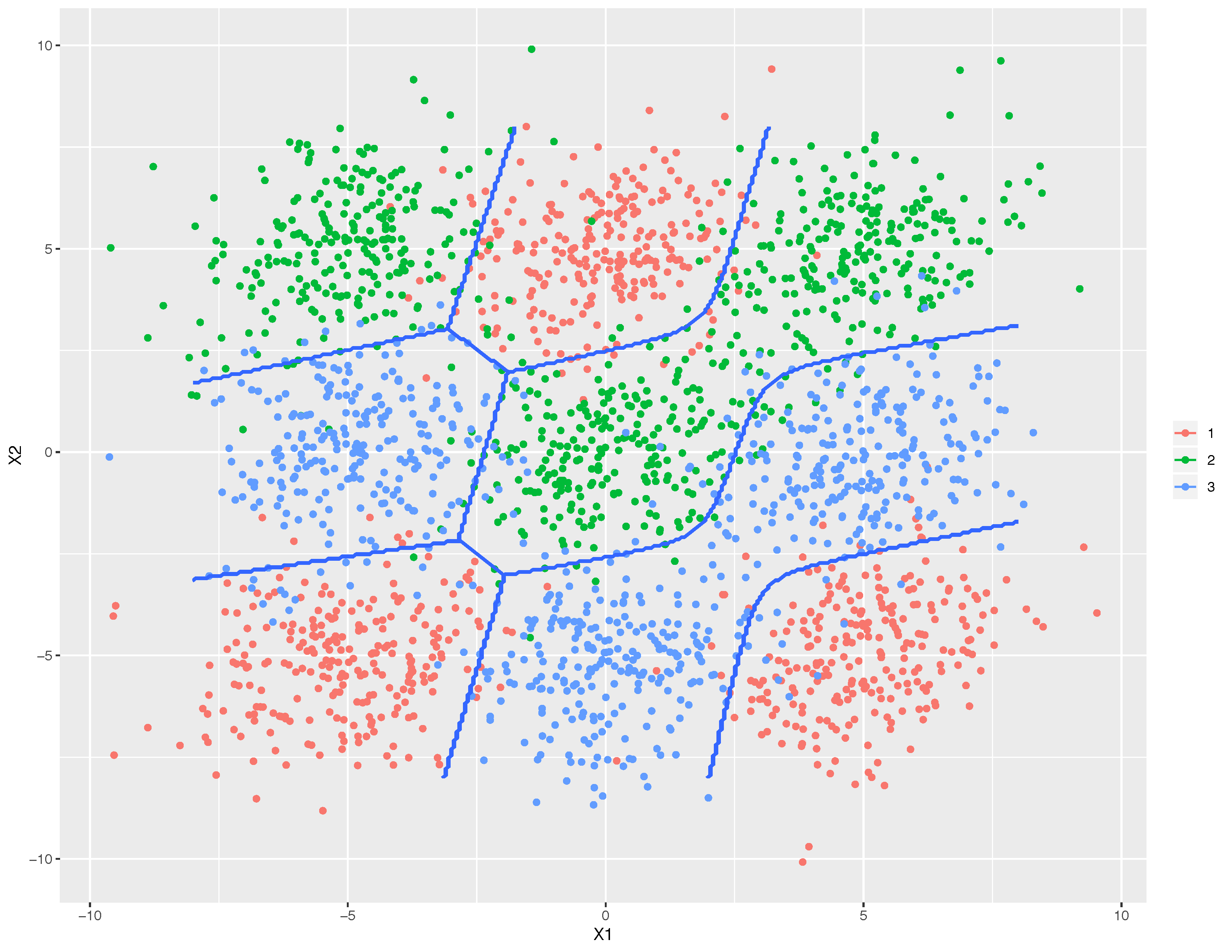

Without any further assumptions, the resulting classifier is referred to as QDA (quadratic discriminant analysis).
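The quadratic rule above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function names `qda_score` and `qda_predict` and the parameter values are made up for this example, and the class means and covariances are taken as already known rather than estimated from a training set.

```python
import numpy as np

def qda_score(x, mu0, sigma0, mu1, sigma1):
    """Left-hand side of the quadratic decision rule for one observation x."""
    d0 = x - mu0
    d1 = x - mu1
    # Squared Mahalanobis distances; solve() avoids forming explicit inverses.
    m0 = d0 @ np.linalg.solve(sigma0, d0)
    m1 = d1 @ np.linalg.solve(sigma1, d1)
    # slogdet returns (sign, log|det|); only the log-determinant is needed here.
    logdet0 = np.linalg.slogdet(sigma0)[1]
    logdet1 = np.linalg.slogdet(sigma1)[1]
    return m0 + logdet0 - m1 - logdet1

def qda_predict(x, mu0, sigma0, mu1, sigma1, threshold=0.0):
    """Return 1 (second class) if the score exceeds the threshold T, else 0."""
    return int(qda_score(x, mu0, sigma0, mu1, sigma1) > threshold)

# Illustrative parameters (hypothetical, not taken from the text).
mu0 = np.array([0.0, 0.0])
mu1 = np.array([2.0, 2.0])
sigma0 = np.eye(2)
sigma1 = np.array([[2.0, 0.0], [0.0, 0.5]])

print(qda_predict(mu1, mu0, sigma0, mu1, sigma1))  # a point at the class-1 mean
print(qda_predict(mu0, mu0, sigma0, mu1, sigma1))  # a point at the class-0 mean
```

Because the two covariance matrices differ, the boundary where the score equals T is a quadratic surface in $\vec{x}$, which is where the name QDA comes from.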

LDA instead makes the additional simplifying homoscedasticity assumption (i.e. that the class covariances are identical, so $\Sigma_0 = \Sigma_1 = \Sigma$) and that the covariances have full rank. In this case, several terms cancel:

$$\vec{x}^T \Sigma_0^{-1} \vec{x} = \vec{x}^T \Sigma_1^{-1} \vec{x}$$

$$\vec{x}^T \Sigma_i^{-1} \vec{\mu}_i = \vec{\mu}_i^T \Sigma_i^{-1} \vec{x}$$

because $\Sigma_i$ is Hermitian, and the above decision criterion becomes a threshold on the dot product

$$\vec{w} \cdot \vec{x} > c$$

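for some threshold constant $c$, where

$$\vec{w} = \Sigma^{-1}(\vec{\mu}_1 - \vec{\mu}_0)$$

$$c = \frac{1}{2}T + \vec{w} \cdot \frac{1}{2}(\vec{\mu}_1 + \vec{\mu}_0)$$

When $T = 0$, this reduces to $c = \vec{w} \cdot \frac{1}{2}(\vec{\mu}_1 + \vec{\mu}_0)$, the value of $\vec{w} \cdot \vec{x}$ at the midpoint between the two class means.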
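As a rough sketch of how the linear rule might be used in practice, the snippet below estimates the class means and a single pooled covariance from labelled samples, then forms $\vec{w}$ and the midpoint threshold $c$ (the $T = 0$ case). The helper names `fit_lda` and `predict_lda`, the pooled-covariance estimator, and the synthetic data are assumptions made for this example; the text itself does not prescribe how the parameters are estimated.

```python
import numpy as np

def fit_lda(X, y):
    """Estimate w and c for two-class LDA from samples X (n, d) and labels y in {0, 1}."""
    X0, X1 = X[y == 0], X[y == 1]
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    # Pooled within-class covariance: one shared estimate for both classes,
    # in line with the homoscedasticity assumption.
    centered = np.vstack([X0 - mu0, X1 - mu1])
    sigma = centered.T @ centered / (len(X) - 2)
    w = np.linalg.solve(sigma, mu1 - mu0)  # w = Sigma^{-1} (mu1 - mu0)
    c = w @ (0.5 * (mu1 + mu0))            # midpoint threshold, i.e. T = 0
    return w, c

def predict_lda(X, w, c):
    """Predict class 1 where w . x > c, else class 0."""
    return (X @ w > c).astype(int)

# Synthetic data (hypothetical): two Gaussian classes sharing one covariance.
rng = np.random.default_rng(0)
cov = np.array([[1.0, 0.3], [0.3, 1.0]])
X = np.vstack([
    rng.multivariate_normal([0.0, 0.0], cov, 200),
    rng.multivariate_normal([3.0, 3.0], cov, 200),
])
y = np.repeat([0, 1], 200)

w, c = fit_lda(X, y)
accuracy = (predict_lda(X, w, c) == y).mean()
print(w, c, accuracy)
```

Using `np.linalg.solve` instead of an explicit inverse is a numerical convenience; it relies on the full-rank assumption stated above.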

This means that the criterion of an input $\vec{x}$ being in a class $y$ is purely a function of this linear combination of the known observations.

It is often useful to see this conclusion in geometrical terms: the criterion of an input $\vec{x}$ being in a class $y$ is purely a function of projection of multidimensional-space point $\vec{x}$ onto vector $\vec{w}$ (thus, we only consider its direction). In other words, the observation belongs to $y$ if corresponding $\vec{x}$ is located on a certain side of a hyperplane perpendicular to $\vec{w}$. The location of the plane is defined by the threshold c.
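The geometric reading can be checked numerically. The sketch below, with a hypothetical helper `signed_distance` and made-up values for the normal vector and threshold, computes the signed distance of a point from the hyperplane $\vec{w} \cdot \vec{x} = c$ and shows that sliding a point parallel to the hyperplane does not change which side it falls on.

```python
import numpy as np

def signed_distance(x, w, c):
    """Signed Euclidean distance from x to the hyperplane w . x = c."""
    return (w @ x - c) / np.linalg.norm(w)

# Illustrative values (hypothetical, not taken from the text).
w = np.array([3.0, 4.0])  # normal vector of the hyperplane, norm 5
c = 10.0                  # threshold

x_a = np.array([2.0, 3.0])  # w . x = 18, above the threshold
x_b = np.array([1.0, 1.0])  # w . x = 7, below the threshold

# A direction orthogonal to w lies within the hyperplane, so moving along it
# leaves the projection onto w, and therefore the predicted class, unchanged.
tangent = np.array([4.0, -3.0])
x_c = x_a + 2.5 * tangent

for x in (x_a, x_b, x_c):
    d = signed_distance(x, w, c)
    print(x, d, int(d > 0))
```

The sign of the distance gives the predicted class, and its magnitude indicates how far the observation sits from the decision boundary.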